Every week brings more news about AIs helping creatives and generating impressive ideas and campaigns. They also generate images, they “see,” and they analyze.

As a photographer seeking to capture emotion in the abstract, I ask myself: If I see poetry in a reflection, what on earth does a machine see? I am not an engineer; I am a photographer, and I feel an immense curiosity to understand this new “competition” and where it is heading. So, I decided to conduct my own experiment: a face-off between my eye and the algorithm.

The test: Pitting my art against Google’s “eye”

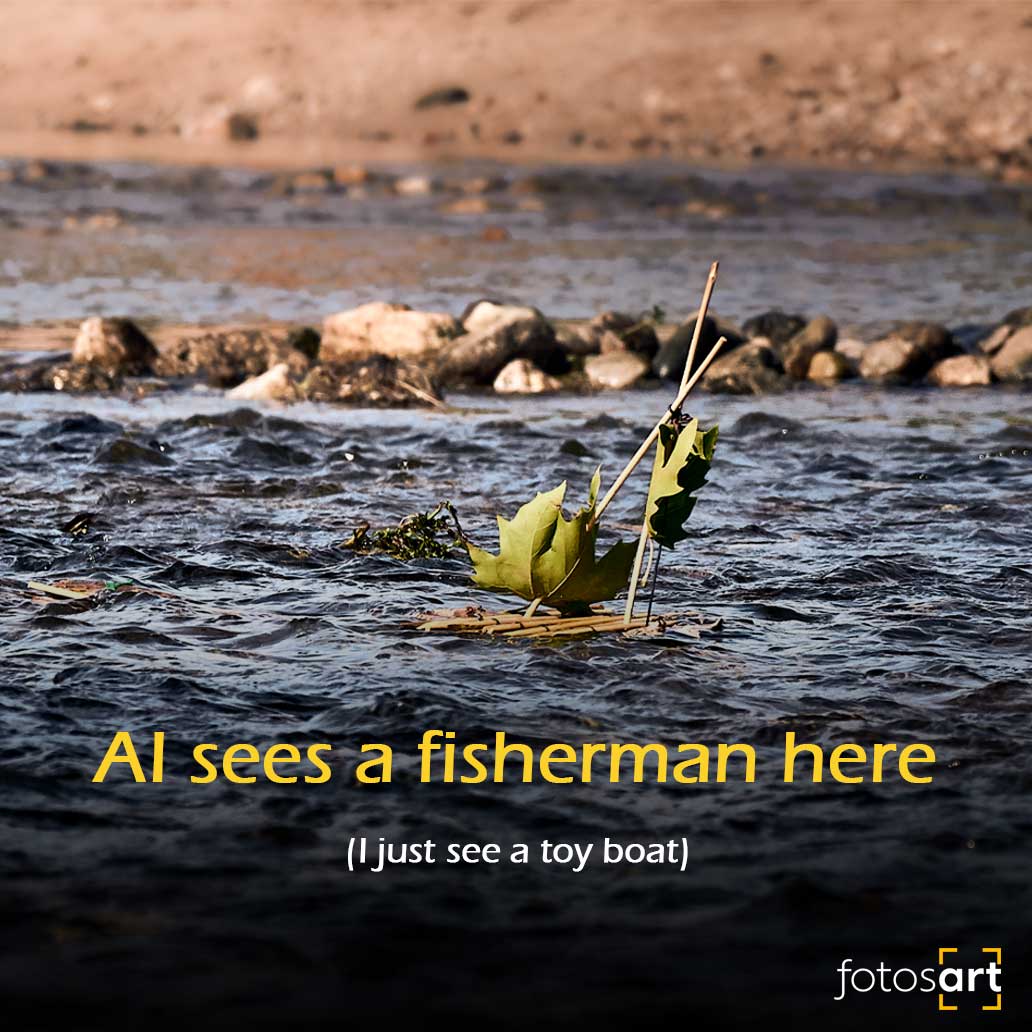

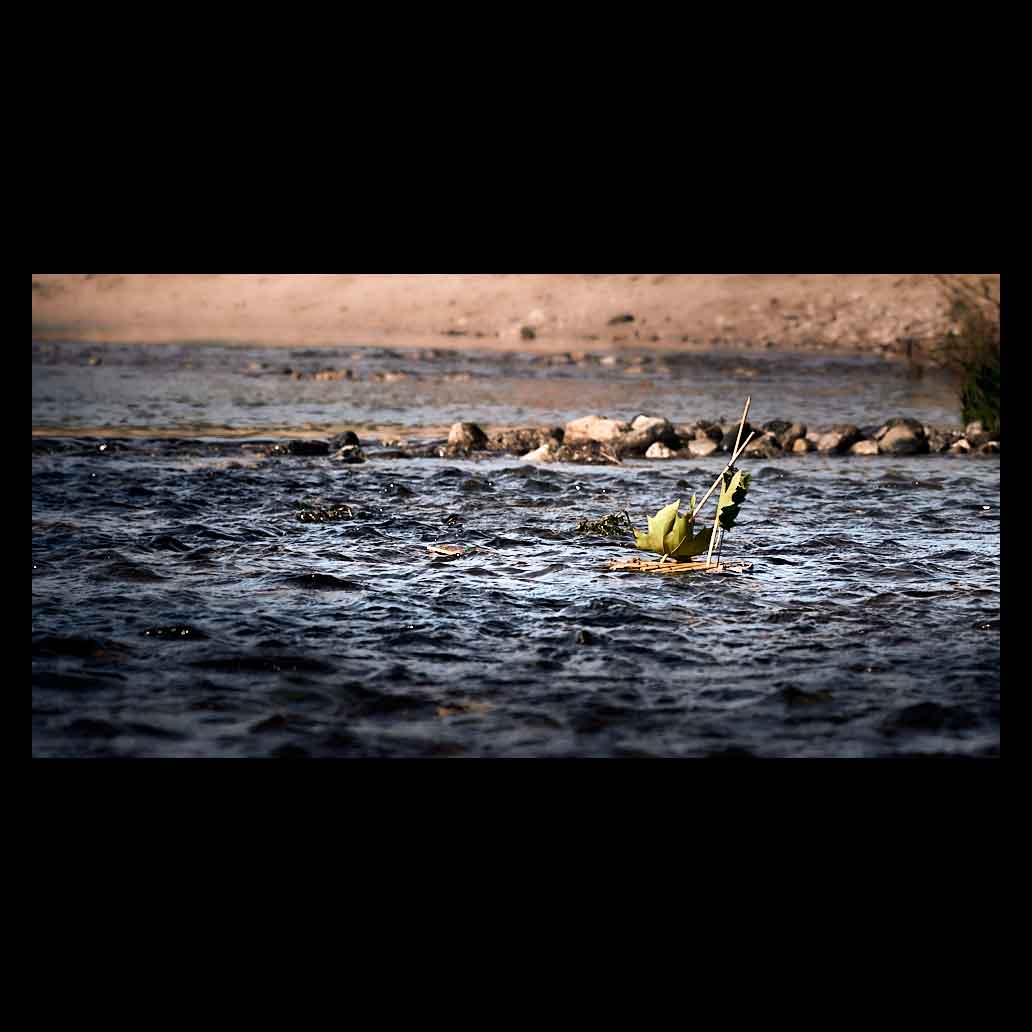

I used one of my recent photographs, one of those images where the main emotional focus blends in with the surroundings, creating something that, for me, tells a story.

I admit I was a bit cheeky in choosing this photo: it is a wide shot in which the main subject is somewhat hidden, yet any human identifies it instantly.

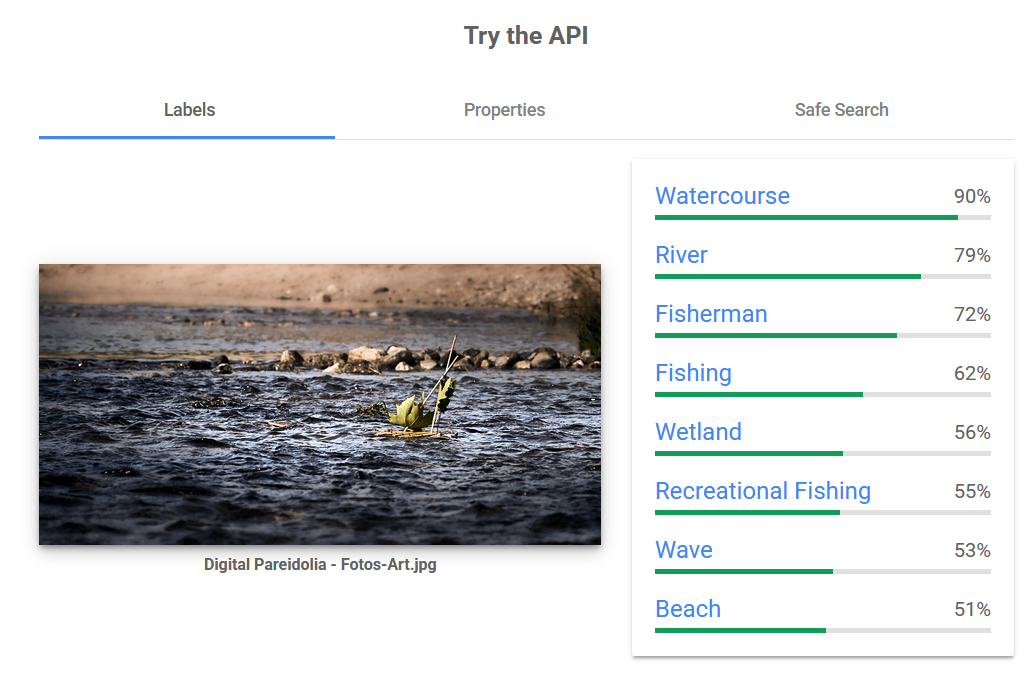

I uploaded it to a computer vision analysis tool (I used the public demo of Google Cloud Vision). I wanted to know how the software would tag my work. Honestly, I was expecting terms like “abstract art,” “composition,” or perhaps “painterly texture.”

The result: Digital Pareidolia

What happened left me perplexed and, strangely, relieved.

What the machine saw (The hallucination)

The algorithm, with a surprising 72% confidence, claimed to see something that doesn’t exist:

- Fisherman

- Recreational Fishing

As far as I can tell, the AI saw the river, detected the small stick serving as a mast, and let its statistical logic fill in the blanks by inventing a person. It hallucinated a ghost fisherman. It seems unable to read scale or playful context; for the machine, if there is a river and a stick, there must be fishing involved. It completely fails to grasp the concept of a “toy.”

What I saw (The reality)

There was no one fishing. It was just a tiny boat made of a leaf and a twig, drifting down the river. My intention was to capture the nostalgia of childhood, that useless and beautiful moment of watching something flow. Although he is not in the frame, a little boy was running along the riverbank to catch it downstream. The AI tried to assign a utility (fishing) to a situation that was pure emotion.
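For the technically curious, the gap between the two readings can be put into a few lines of Python. This is only a rough sketch, not how Google Cloud Vision works internally: the two label names come from my test, but attaching the 72% score to both of them, the `human_tags` list, and the `unexpected_labels` helper are my own assumptions for illustration.

```python
# Labels as a vision tool might report them: (description, confidence score).
# The descriptions come from the experiment; giving both a score of 0.72
# is an assumption made for illustration.
machine_labels = [
    ("Fisherman", 0.72),
    ("Recreational Fishing", 0.72),
]

# What the photographer says is actually in the frame (hypothetical tag list).
human_tags = {"river", "leaf", "twig", "toy boat", "childhood"}


def unexpected_labels(labels, tags, min_score=0.5):
    """Return confident machine labels that match none of the human tags."""
    known = {tag.lower() for tag in tags}
    return [
        (description, score)
        for description, score in labels
        if score >= min_score and description.lower() not in known
    ]


for description, score in unexpected_labels(machine_labels, human_tags):
    print(f"{description}: {score:.0%} confident, but not in the photo")
```

Every label this prints is something the machine was fairly sure about and that I, standing on the riverbank, never saw.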

Why this is vital if you use my photos

This small AI error confirmed the true value of the digital assets I offer.

If you are a designer or creative and you license my photos, you aren’t buying a JPG file of “soil.” You are buying a human vision that the machine cannot replicate precisely because it cannot “feel.”

AI seeks to close meaning (telling you what it is). My photography seeks to open it (letting you feel what it is). That ambiguity is what makes an image work in an editorial or web project: it allows the viewer to project their own emotions, without rigid labels.

A pledge of curiosity

This doesn’t end here. I’ve caught the bug. I have decided to turn this into the beginning of “Project Pareidolia.”

I am going to keep challenging the algorithms with my new works periodically. I want to track whether one day the machine learns to see the “melancholy” in a chipped wall or whether it will continue to see only data. Until then, I will keep going out with my camera to capture that which, fortunately, remains invisible to code.